Artificial Intelligence (AI) is transforming industries, redefining business models, and reshaping how decisions are made. From automating financial transactions to diagnosing diseases and managing cybersecurity threats, AI’s influence continues to expand. However, as AI becomes more embedded in critical systems, its potential risks (bias, opacity, privacy violations, and ethical misuse) demand deliberate oversight. This is where AI governance and risk management come in.

The Imperative for AI Governance

AI governance refers to the framework of policies, processes, and controls designed to ensure that AI systems are ethical, transparent, and accountable. Without governance, organizations risk deploying models that inadvertently harm individuals, perpetuate bias, or violate regulations.

Good governance provides clarity on three key questions:

- Who is responsible for AI decisions?

- How are those decisions made?

- What safeguards are in place to prevent unintended outcomes?

In essence, AI governance builds trust, not just in the technology but in the organizations deploying it. That trust underpins public acceptance of AI and the long-term success of AI innovation.

Understanding AI Risk Management

AI risk management focuses on identifying, assessing, and mitigating risks throughout the AI lifecycle, from design and data collection to deployment and monitoring. Unlike traditional IT systems, AI introduces dynamic, context-dependent risks: a model's behavior can change when it is retrained, and its accuracy can degrade as real-world data drifts away from the data it was trained on.

Some of the most common AI risks include:

- Bias and fairness issues: Discriminatory outcomes arising from unrepresentative or skewed training data.

- Lack of transparency: “Black-box” models that make decisions no one fully understands.

- Security vulnerabilities: Data or model poisoning and adversarial inputs crafted to manipulate AI behavior.

- Regulatory non-compliance: Breaches of data protection laws such as the GDPR, or of emerging AI-specific regulations such as the EU AI Act.

- Ethical concerns: Misuse of AI for surveillance, misinformation, or discrimination.

A robust AI risk management framework should combine technical safeguards (like explainability tools and adversarial testing) with organizational measures (such as ethical review boards, audit trails, and accountability structures).
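
As one example of a technical safeguard for the bias risk listed above, a team might compare how often a model produces a favorable outcome for each group. The Python sketch below computes two common group-fairness measures, the demographic parity difference and the disparate impact ratio, from binary predictions. The function names, the toy data, and the 0.8 review threshold (borrowed from the "four-fifths" rule of thumb used in US employment selection) are illustrative assumptions, not a complete fairness audit.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of favorable predictions (1s) for each group."""
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        favorable[group] += pred
    return {group: favorable[group] / totals[group] for group in totals}


def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest group selection rates (0 means parity)."""
    rates = selection_rates(predictions, groups).values()
    return max(rates) - min(rates)


def disparate_impact_ratio(predictions, groups):
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    rates = selection_rates(predictions, groups).values()
    highest = max(rates)
    return min(rates) / highest if highest > 0 else 1.0


def needs_fairness_review(predictions, groups, min_ratio=0.8):
    """Flag the model for human review when the ratio falls below min_ratio (assumed 0.8)."""
    return disparate_impact_ratio(predictions, groups) < min_ratio


if __name__ == "__main__":
    # Hypothetical toy data: 1 = favorable outcome, split by a protected attribute.
    preds = [1, 0, 1, 1, 0, 1, 0, 0, 1, 0]
    groups = ["A"] * 5 + ["B"] * 5
    print(selection_rates(preds, groups))
    print(round(disparate_impact_ratio(preds, groups), 2))
    print(needs_fairness_review(preds, groups))
```

With this toy data, group A receives a favorable outcome 60% of the time and group B 40%, a ratio of about 0.67, so the check flags the model. A single metric like this cannot show that a model is fair; it is a trigger for closer investigation.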

Building a Framework for Responsible AI

Organizations seeking to operationalize AI governance can build on established frameworks and standards such as:

- NIST AI Risk Management Framework (AI RMF): A voluntary framework, organized around four functions (Govern, Map, Measure, Manage), for managing AI risks.

- ISO/IEC 42001 (AI Management System): Specifies requirements for establishing, implementing, maintaining, and continually improving an AI management system within an organization.

- OECD AI Principles: Emphasize human-centered and trustworthy AI.

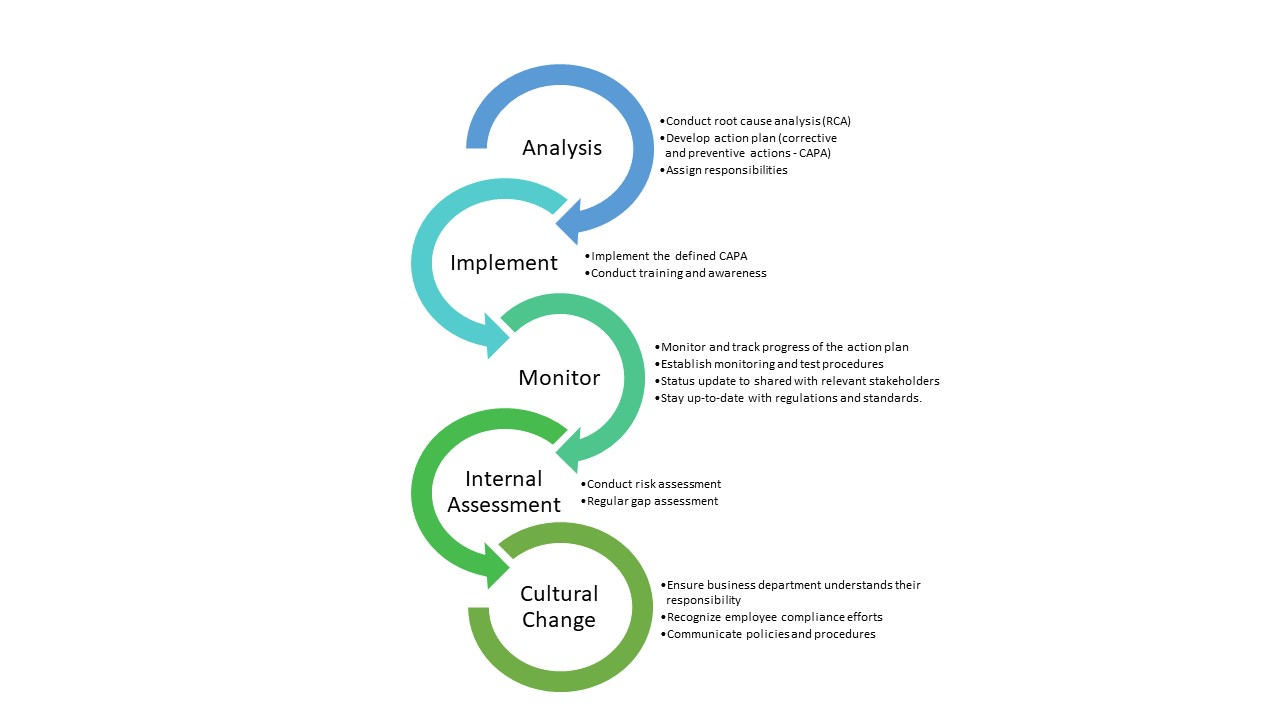

To mitigate key risks in AI deployments, such as algorithmic bias, model opacity, data privacy violations, and regulatory non-compliance, organizations should adopt a structured approach:

- Establish AI governance policies aligned with business ethics and legal standards

- Conduct risk assessments across the AI lifecycle—from data sourcing to model deployment

- Implement explainability tools to make AI decisions interpretable

- Monitor AI performance continuously for drift, bias, and anomalies

- Engage cross-functional teams including legal, compliance, cybersecurity, and data science
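
Risk assessments across the lifecycle are often recorded in a risk register that scores each risk by likelihood and impact. The Python sketch below assumes a 1-to-5 scale for both and rates their product as low, medium, or high. The scales, the rating cut-offs, and the example risks are hypothetical choices for illustration; an organization would substitute its own risk methodology.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    """One entry in a hypothetical AI risk register."""
    name: str
    stage: str        # lifecycle stage, e.g. "data sourcing" or "deployment"
    likelihood: int   # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int       # assumed scale: 1 (negligible) to 5 (severe)

    def __post_init__(self):
        # Reject scores outside the assumed 1-5 scale.
        for field_name in ("likelihood", "impact"):
            value = getattr(self, field_name)
            if not 1 <= value <= 5:
                raise ValueError(f"{field_name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


def rating(score: int) -> str:
    """Map a 1-25 score to a rating; the cut-offs are illustrative assumptions."""
    if score >= 15:
        return "high"
    if score >= 8:
        return "medium"
    return "low"


def prioritize(risks):
    """Highest-scoring risks first."""
    return sorted(risks, key=lambda risk: risk.score, reverse=True)


if __name__ == "__main__":
    register = [
        Risk("Skewed training data", "data sourcing", likelihood=4, impact=4),
        Risk("Unexplained loan denials", "deployment", likelihood=3, impact=5),
        Risk("Adversarial input manipulation", "deployment", likelihood=2, impact=4),
    ]
    for risk in prioritize(register):
        print(f"{risk.stage:<14} {risk.name:<32} score={risk.score:>2} ({rating(risk.score)})")
```

Sorting by score gives the cross-functional team a prioritized list to work through; in practice each entry would usually also record an owner and a planned mitigation.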

A comprehensive AI governance framework should include:

- Clear policies defining acceptable AI use.

- Data governance controls to ensure data quality, privacy, and fairness.

- Model validation and monitoring to track performance drift and detect anomalies.

- Human oversight mechanisms for high-impact decisions.

- Documentation and transparency for accountability and regulatory audits.
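
Monitoring for drift can start by comparing the distribution of a model input or score in production against the distribution seen at validation time. The Python sketch below computes the Population Stability Index (PSI), one widely used drift measure, over fixed bins. The bin edges, the small epsilon for empty bins, the sample data, and the 0.1 and 0.25 alert thresholds (a common rule of thumb, not a standard) are assumptions to tune per model.

```python
import bisect
import math


def bin_proportions(values, edges, eps=1e-6):
    """Share of values per bin; len(edges) cut points define len(edges) + 1 bins."""
    counts = [0] * (len(edges) + 1)
    for value in values:
        # bisect_right gives the bin index; values below the first edge
        # or at/above the last edge land in the two end bins.
        counts[bisect.bisect_right(edges, value)] += 1
    # eps keeps empty bins from causing log(0) or division by zero below.
    return [max(count / len(values), eps) for count in counts]


def population_stability_index(baseline, live, edges):
    """PSI = sum over bins of (live% - baseline%) * ln(live% / baseline%)."""
    expected = bin_proportions(baseline, edges)
    actual = bin_proportions(live, edges)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


def drift_status(psi, moderate=0.1, significant=0.25):
    """Map a PSI value to an alert level using assumed rule-of-thumb thresholds."""
    if psi >= significant:
        return "significant drift"
    if psi >= moderate:
        return "moderate drift"
    return "stable"


if __name__ == "__main__":
    # Hypothetical model scores: validation baseline vs. a shifted production sample.
    baseline = [i / 100 for i in range(100)]
    live = [0.5 + i / 200 for i in range(100)]
    edges = [0.25, 0.5, 0.75]
    psi = population_stability_index(baseline, live, edges)
    print(f"PSI = {psi:.2f} -> {drift_status(psi)}")
```

In this example the production scores have all moved into the upper half of the range, so the PSI lands far above 0.25 and the check reports significant drift. Because empty bins are clamped to a tiny epsilon, the exact PSI value is sensitive to that choice; the alert level is the more useful signal.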

The Human Factor: Ethics and Accountability

AI governance isn’t purely a technical challenge—it’s a human one. Ethical considerations must guide every stage of AI development and deployment. Governance frameworks should ensure that:

- Decisions remain human-centric, with humans retaining the “final say.”

- Developers and executives are accountable for outcomes.

- Ethical principles like justice, beneficence, and non-maleficence are embedded in practice.
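
One way to let humans keep the final say on high-impact decisions is a routing rule placed in front of the model's output: anything flagged as high impact, or below a confidence threshold, goes to a human reviewer, and every decision is written to an audit log. The Python sketch below assumes a hypothetical `Decision` record and a 0.9 confidence threshold; both are illustrative choices, not a prescribed design.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class Decision:
    """Hypothetical record of one model decision awaiting routing."""
    case_id: str
    model_output: str
    confidence: float
    high_impact: bool
    route: str = "pending"
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def route_decision(decision, min_confidence=0.9):
    """Send high-impact or low-confidence decisions to a human reviewer."""
    if decision.high_impact or decision.confidence < min_confidence:
        decision.route = "human_review"
    else:
        decision.route = "automated"
    return decision


def audit_record(decision):
    """Serialize the decision as one JSON line for an append-only audit trail."""
    return json.dumps(asdict(decision), sort_keys=True)


if __name__ == "__main__":
    cases = [
        Decision("case-001", "approve", confidence=0.97, high_impact=False),
        Decision("case-002", "deny", confidence=0.97, high_impact=True),
        Decision("case-003", "approve", confidence=0.62, high_impact=False),
    ]
    for case in cases:
        print(audit_record(route_decision(case)))
```

Routing alone does not guarantee meaningful oversight: reviewers also need enough context, time, and authority to overrule the model, and the audit log is what lets the organization show afterward who decided what.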

Accountability also means maintaining transparency with stakeholders—users should know when they’re interacting with AI and how their data is used.

Building Trust Through Transparency and Collaboration

To build public trust, organizations must adopt a “trust-by-design” approach—embedding transparency, explainability, and fairness into AI systems from the start. Cross-functional collaboration between data scientists, compliance officers, legal experts, and ethicists ensures balanced oversight.

Additionally, open dialogue with regulators, industry peers, and civil society promotes responsible innovation. The goal isn’t to stifle AI development but to guide it toward outcomes that benefit society safely and fairly.

Conclusion

As AI becomes a cornerstone of digital transformation, governance and risk management are not optional—they are essential. Governing the algorithm means balancing innovation with responsibility, efficiency with ethics, and automation with accountability.

Building trust in AI systems requires a commitment to transparency, fairness, and human oversight. Organizations that embrace these principles will not only mitigate risk but also gain a competitive advantage—by proving that their AI systems deserve the confidence of the people they serve.
